Speech sound disorders are a common impairment in children. Most current interventions for these disorders rely heavily on auditory skills; that is, speakers must listen to their own productions and modify them. Results have shown that relying on auditory skills to treat speech sound disorders in children has a low success rate. To address this problem, researchers in linguistics are actively developing speech production systems that can identify errors made during speech and provide real-time visual feedback of tongue movements, so that speakers can analyze and correct their tongue shape to achieve the correct pronunciation of each sound.

Knowledge of the vocal tract is also useful for obtaining more accurate data for acoustic modeling of speech production and for studying the mechanics of the vocal tract. In this regard, the shape and dynamics of the human tongue during speech are crucial to the analysis and modeling of the speech production system.

Ultrasound imaging is one of the best-suited and most common medical imaging modalities for acquiring sequences of tongue images during speech, for several reasons. Ultrasound is non-invasive: tongue surface scans are collected with a transducer held under the chin, and with a compressible standoff the tongue and jaw remain free to make normal speech movements. Ultrasound is safe: it uses high-frequency (~5 MHz) sound, which poses no danger to subjects in repeated trials. Ultrasound imaging is fast: a main advantage over other imaging modalities such as X-ray or MRI is that its high frame-acquisition rate (~30 frames per second) allows it to monitor the movement of the tongue. In addition, unlike X-ray, it does not expose the subject to ionizing radiation, and it can effectively capture time-varying features in real time.

Because ultrasound acquires frames so quickly, manually detecting the tongue surface is extremely tedious and time-consuming: at ~30 frames per second, a single minute of recording already yields about 1,800 frames. Automatic detection of the correct tongue surface is also challenging, because ultrasound images contain noise as well as unrelated high-contrast edges and regions. In addition, the tongue's ability to assume complex shapes during speech makes it difficult to track.
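
Taking the brightest pixel in each column is the naive way to find a bright ridge, and it is exactly what noise and unrelated high-contrast edges defeat. A minimal sketch of one generic remedy, which encodes a single empirical property of the tongue (its surface is a continuous curve), is to search for the brightest smooth path with dynamic programming. The function name `extract_bright_curve`, the `max_jump` smoothness limit, and the synthetic frame are hypothetical; the sketch assumes the surface shows up as a bright ridge spanning the frame with one row per column, and it is not the detection method developed in this work, which also uses machine learning.

```python
import numpy as np


def extract_bright_curve(image, max_jump=2):
    """Trace the brightest left-to-right path, one row index per column.

    Hypothetical helper: assumes the tongue surface is a bright ridge and that
    its row changes by at most ``max_jump`` between neighboring columns.
    """
    img = np.asarray(image, dtype=float)
    rows, cols = img.shape
    score = np.empty((rows, cols))            # best path score ending at (r, c)
    back = np.zeros((rows, cols), dtype=int)  # predecessor row for traceback
    score[:, 0] = img[:, 0]
    for c in range(1, cols):
        for r in range(rows):
            # Only predecessors within max_jump rows keep the curve smooth.
            lo, hi = max(0, r - max_jump), min(rows, r + max_jump + 1)
            best = lo + int(np.argmax(score[lo:hi, c - 1]))
            score[r, c] = score[best, c - 1] + img[r, c]
            back[r, c] = best
    # Start from the best end point in the last column and walk backwards.
    path = np.empty(cols, dtype=int)
    path[-1] = int(np.argmax(score[:, -1]))
    for c in range(cols - 1, 0, -1):
        path[c - 1] = back[path[c], c]
    return path


# Synthetic 20 x 10 "frame": a faint smooth ridge plus one bright, isolated speck.
true_rows = [10, 10, 11, 11, 12, 12, 11, 11, 10, 10]
frame = np.zeros((20, 10))
frame[true_rows, np.arange(10)] = 1.0
frame[2, 5] = 3.0

print(extract_bright_curve(frame).tolist())  # [10, 10, 11, 11, 12, 12, 11, 11, 10, 10]
print(int(frame.argmax(axis=0)[5]))          # 2 (per-column maximum is fooled by the speck)
```

Because the path can move at most `max_jump` rows per column, detouring to the isolated speck would cost several columns of the real ridge, so the dynamic program ignores it while the per-column maximum does not. The double loop is written for clarity; its cost grows with rows × columns × `max_jump`.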

In this research, we combined empirical properties of the tongue with machine learning algorithms to build a robust and efficient tongue detection and tracking system. We also investigated methods for localizing the region in which the tongue appears within the ultrasound images, and we explored ways to develop a mathematical model capable of tracking the detected tongue surface across ultrasound image sequences.
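
The form of the tracking model is not detailed here. As a hypothetical illustration only, one standard choice is a constant-velocity Kalman filter run independently on each point of the detected contour (for instance, the surface row at a fixed image column): it predicts where the point should be in the next frame from its recent motion, then corrects that prediction with the new detection. The class name `ConstantVelocityTracker`, the frame interval `dt = 1/30` s (taken from the ~30 frames per second quoted above), and the noise variances `accel_var` and `meas_var` are assumptions, not parameters of the system described here.

```python
import numpy as np


class ConstantVelocityTracker:
    """Kalman filter for one contour coordinate under a constant-velocity model.

    Hypothetical sketch: the state is [position, velocity] in pixels and
    pixels/second; each measurement is the position reported by a per-frame
    detector for the same contour point.
    """

    def __init__(self, first_pos, dt=1 / 30, accel_var=100.0, meas_var=4.0):
        self.x = np.array([float(first_pos), 0.0])  # start at rest
        self.P = np.diag([meas_var, 1e3])           # large initial velocity uncertainty
        self.F = np.array([[1.0, dt], [0.0, 1.0]])  # constant-velocity transition
        self.H = np.array([[1.0, 0.0]])             # only position is observed
        # Process noise of a discrete white-acceleration model.
        self.Q = accel_var * np.array([[dt**4 / 4, dt**3 / 2],
                                       [dt**3 / 2, dt**2]])
        self.R = np.array([[meas_var]])

    def step(self, measured_pos):
        """Predict the next frame, correct with the new detection, return position."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        innovation = np.array([measured_pos]) - self.H @ self.x
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)
        self.x = self.x + K @ innovation
        self.P = (np.eye(2) - K @ self.H) @ self.P
        return float(self.x[0])


# Usage: smooth hypothetical per-frame detections of one contour point (row, px).
detections = [100.0, 101.5, 99.8, 103.2, 104.1, 103.9, 106.0]
tracker = ConstantVelocityTracker(detections[0])
smoothed = [detections[0]] + [tracker.step(z) for z in detections[1:]]
```

Running one such tracker per contour point gives a simple way to carry a detected contour from frame to frame, and the prediction step can also narrow the search window for the detector in the next frame. The noise variances would need to be tuned on real recordings.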

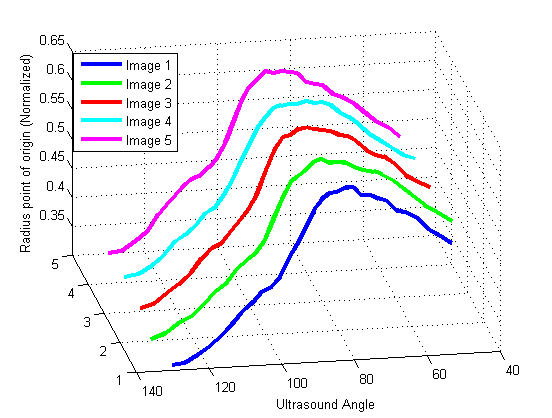

The video sequence at the top left shows a typical sequence of ultrasound images captured during speech. The graph at the bottom right shows the tongue surface detected by our algorithm on multiple frames of this sequence.

This work was a collaborative effort with Prof. Diana Archangeli in the Dept. of Linguistics, College of Social and Behavioral Sciences, and with Prof. Ian Fasel in the Dept. of Computer Science, College of Science, University of Arizona.