A defining element of most videos is a moving object: something whose position we can clearly perceive to change over time. A natural first step on observing such motion is to estimate how far the object has moved from one frame to the next relative to its surroundings. Once the object's motion is estimated, the logical next step is to track it from frame to frame until it leaves the area of interest or the video ends.

Motion estimation and tracking of an object is a crucial and complex problem for applications such as real-time video surveillance for intelligent home security systems; traffic monitoring (including speed estimation of moving vehicles); tracking objects of interest in medical image sequences, such as tracking the heart to quantify volumetric blood flow; and tracking deformable objects in biological time-lapse video, such as fluorescent spots and moving cells, to understand the underlying cell morphodynamics. The problem becomes especially challenging as we seek faster algorithms that perform motion estimation and tracking in 3-D, and in environments with unpredictable lighting, noise, interference, or occlusion by other objects.

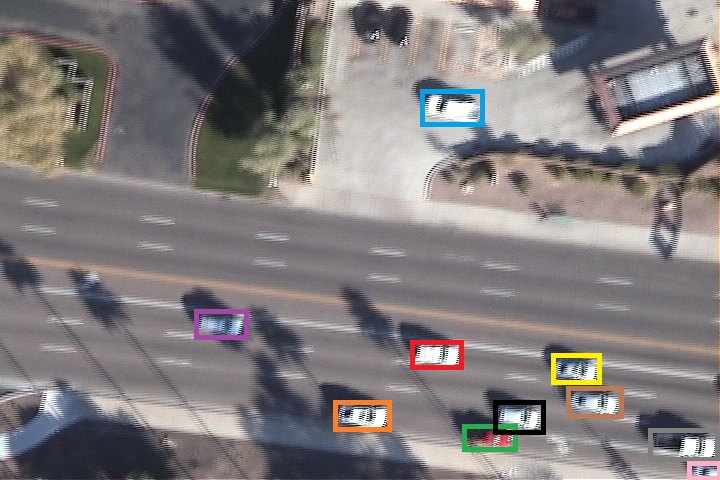

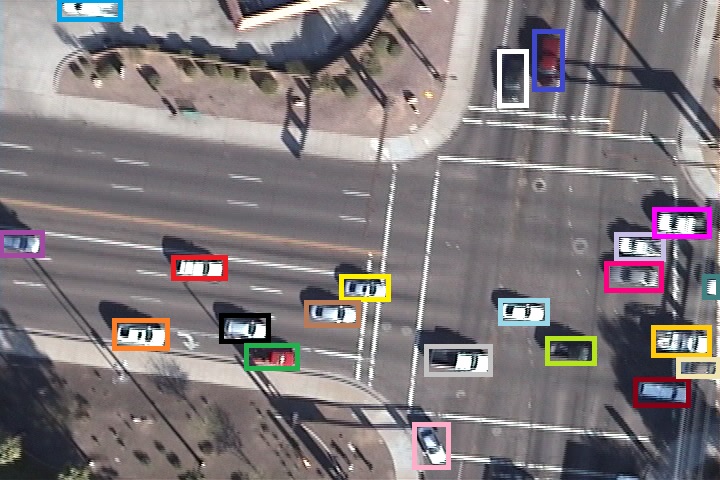

(a) time t=k (b) time t=k+1 (c) time t=k+2 (d) time t=k+3 (e) time t=k+4


Figure 1: Motion estimation and tracking of cars on a roadway.

Traffic surveillance of vehicles on city roads and expressways is an application of great interest, as vehicular traffic on important roadways can be monitored from aerial video. Accurate detection of moving vehicles and robust tracking from frame to frame can provide usable data such as speed estimates, records of speeding and moving violations, and rush-hour and congestion statistics at intersections and busy roadways. An example of a traffic monitoring system is shown in Fig. 1, which shows individual frames from a video of moving traffic. New cars entering the frame are detected automatically and added to the list of moving objects that the tracking algorithm follows from frame to frame. This specific application deals primarily with translational motion of vehicles along what is presumed to be a straight road, with some rotational motion as vehicles make turns or change lanes.
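The bookkeeping described above (matching detections to existing tracks, adding newly detected cars, and estimating speed) can be illustrated with a minimal sketch. This is not the tracking algorithm used in this work; it is a greedy nearest-centroid matcher chosen only for illustration. The function names, the `max_dist` gating threshold, and the `fps` and `meters_per_pixel` calibration values are all assumptions, and vehicle detections are assumed to arrive as (x, y) centroids in pixels.

```python
import math

def update_tracks(tracks, detections, frame_idx, next_id, max_dist=50.0):
    """Greedily match (x, y) detections to tracks; start new tracks for the rest.

    tracks maps a track id to a list of (frame_idx, x, y) observations.
    max_dist is an assumed gating threshold in pixels.
    Returns the next unused track id.
    """
    unmatched = list(detections)
    for path in tracks.values():
        if not unmatched:
            break
        _, lx, ly = path[-1]
        # Pick the detection closest to this track's last known position.
        nearest = min(unmatched, key=lambda d: math.hypot(d[0] - lx, d[1] - ly))
        if math.hypot(nearest[0] - lx, nearest[1] - ly) <= max_dist:
            path.append((frame_idx, nearest[0], nearest[1]))
            unmatched.remove(nearest)
    # Any detection left over is treated as a vehicle entering the frame.
    for x, y in unmatched:
        tracks[next_id] = [(frame_idx, x, y)]
        next_id += 1
    return next_id

def speed_mps(path, fps, meters_per_pixel):
    """Speed in m/s from the last two observations of one track."""
    (f0, x0, y0), (f1, x1, y1) = path[-2], path[-1]
    pixels = math.hypot(x1 - x0, y1 - y0)
    return pixels * meters_per_pixel * fps / (f1 - f0)

# Hypothetical detections for three consecutive frames.
frames = [[(10, 100)], [(20, 100), (300, 50)], [(30, 100), (290, 50)]]
tracks, next_id = {}, 0
for k, detections in enumerate(frames):
    next_id = update_tracks(tracks, detections, k, next_id)
```

Greedy matching is order-dependent and can mis-assign vehicles that pass close to one another; it is used here only to keep the sketch short.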

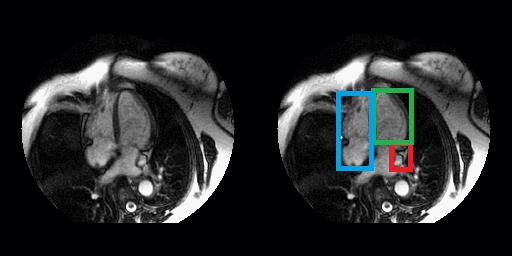

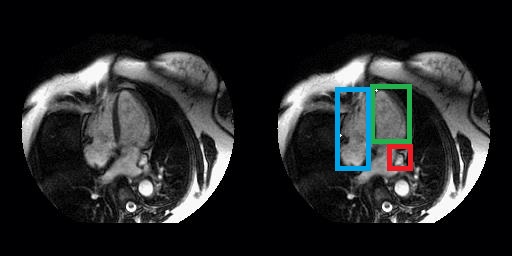

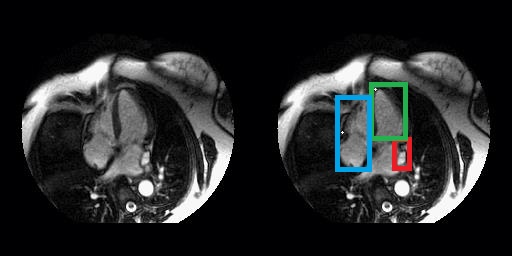

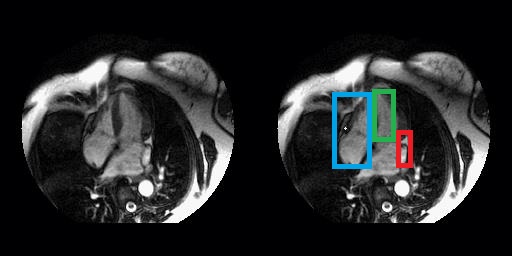

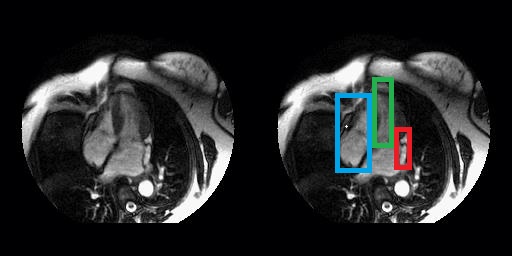

(a) time t=k (b) time t=k+1 (c) time t=k+2 (d) time t=k+3 (e) time t=k+4


Figure 2: Motion estimation and tracking of chambers of the heart in MRI.

Cardiac magnetic resonance images are shown in Fig. 2, in which five time slices depict the heart pumping blood into the circulatory system. Tracking the movement of the heart enables us to determine the volume of blood being pumped by the heart as well as the temporal signature of the cardiac cycle. This information could be vital in detecting cardiac dysrhythmia and in measuring cardiac output. The images are taken while the patient is stationary, and are registered with each other to negate any translational motion artifacts. This application mainly deals with rotational motion, as there is negligible translational motion.
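As a rough illustration of how tracked chamber volumes relate to the quantities mentioned above, the sketch below applies the standard definitions: stroke volume is end-diastolic volume minus end-systolic volume, and cardiac output is stroke volume times heart rate. It assumes a per-frame chamber volume (in mL) has already been obtained from the tracked outlines over one cardiac cycle; how that volume is computed is not specified here. The function name and the sample values are hypothetical.

```python
def cardiac_metrics(volumes_ml, heart_rate_bpm):
    """Stroke volume (mL), ejection fraction, and cardiac output (L/min).

    volumes_ml: assumed chamber volume at each time slice of one cardiac cycle.
    """
    edv = max(volumes_ml)  # end-diastolic volume: chamber at its fullest
    esv = min(volumes_ml)  # end-systolic volume: chamber at its emptiest
    stroke_volume = edv - esv
    ejection_fraction = stroke_volume / edv
    cardiac_output_l_min = stroke_volume * heart_rate_bpm / 1000.0
    return stroke_volume, ejection_fraction, cardiac_output_l_min

# Hypothetical volumes for five time slices, at 60 beats per minute.
sv, ef, co = cardiac_metrics([120.0, 100.0, 60.0, 50.0, 90.0], heart_rate_bpm=60)
```

Taking the maximum and minimum over only five frames will generally miss the true end-diastolic and end-systolic instants, so the result is a coarse estimate.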

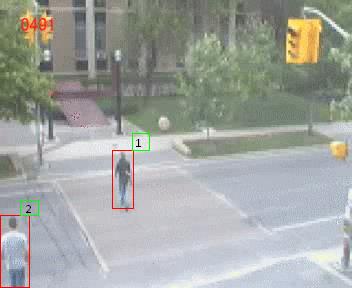

(a) time t=k (b) time t=k+1 (c) time t=k+2 (d) time t=k+3 (e) time t=k+4


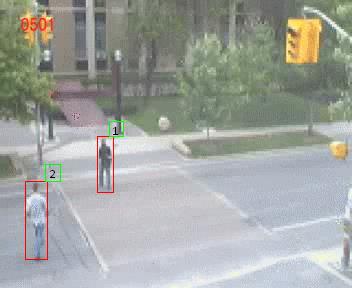

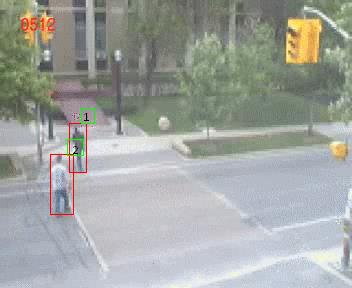

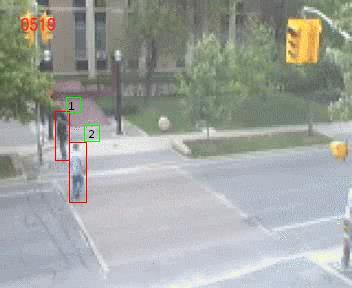

Figure 3: Motion estimation and tracking of people walking on a street.

Visual surveillance systems, such as home security systems, monitor moving objects and people and are responsible for detecting anomalous behavior or trespassers. Fig. 3 shows five frames from a home security surveillance system that tracks people entering and exiting the field of view. This application involves translational and rotational motion in equal measure.

Publications:

- Rohit C. Philip, Sundaresh Ram, Xin Gao, and Jeffrey J. Rodriguez, "A Comparison of Tracking Algorithm Performance for Objects in Wide Area Imagery," in 2014 IEEE Southwest Symp. on Image Analysis and Interpretation, April 6-8, 2014, San Diego, CA, pp. 109-12.